ORIGINALLY PUBLISHED IN OCTOBER, SPRING 2003.

•1.

Fredric Jameson does not like predictions. His is an owlish and retrospective Marxism, one that happily forgoes the crystal ball of some former orthodoxy. There is a Hegelian lesson that Jameson’s writing repeatedly attempts to impart, which is that wisdom only comes in the backwards glance, that we glimpse history only in the moment when our plans fail or dialectically backfire, when our actions bump up against the objective, hurtful (but never foreseeable) limits of the historical situation. You can draw up your revolutionary schemes, paint the future as gaily or grimly as you like, but only upon review will it become plain in just what way you have been Reason’s dupe. If this point is unclear, you might consider Jameson’s response to the World Trade Center attacks, which began with the following extraordinary observation: “I have been reluctant to comment on the recent ‘events’ because the event in question, as history, is incomplete and one can even say that it has not yet fully happened. … Historical events…are not punctual, but extend in a before and after of time which only gradually reveal themselves.”[1] I suspect many will find remarkable Jameson’s reluctance here to help shape the public response to September 11th. An event that has not fully happened yet is, after all, an event in which one may yet intercede, an event that one needn’t yet cede to the Right, an event to which one might yet attribute one’s own polemical and political meanings. But Jameson makes a conspicuous display here of spurning what Left criticism generally (and glibly) calls an “intervention”—as though the business of a Marxist criticism were not to intervene, but rather to bide its time, to wait until an event has been thoroughly mediated or has disclosed its function, and then to identify, with the serene impotence of hindsight, history’s great game. Any event is, like revolution itself, a leap into the unknown. The owl of Minerva only flies in November.

One might wonder, then, how Jameson feels about his own writing, which has been so accidentally and accurately predictive. How does he feel, for instance, about his landmark postmodernism essay, the one that sometimes goes by the name “Postmodernism and Consumer Society”?[2] That article so neatly anticipated U.S. popular culture in the 1990s that it is hard to shake the feeling that a whole generation of artists—writers, musicians, filmmakers above all—must have mistaken it for a manifesto. (“Pastiche—check. Death of the subject—you bet. Depthlessness and disorientation—where do I sign up?”) As ridiculous as it may sound, the essay, first published in 1983, now reads like an exercise in cultural embryology, discerning the first, fetal traces of an aesthetic mode that would become fully evident only in the years that followed. One wonders, too, if young readers encountering the article for the first time now don’t therefore underestimate its savvy. One wonders if they don’t find it rather trite, since a sharp-eyed exegesis of Body Heat (1981) is really just a workaday description of L.A. Confidential (1997)—a script treatment.

We can be more precise: What has seemed so strangely prophetic about Jameson’s postmodernism argument are, oddly enough, its Benjaminian qualities. Benjamin’s fingerprints seem, in some complicated way, to be all over postmodernism. One might even say that postmodernism in America is a dismal parody of Benjaminian thought. Just cast an eye back over the last ten years, over U.S. pop culture on the cusp of the millennium—postmodernism post-Jameson. Consider, for instance, the apocalypticism that has been among its most persistent trends. The recent fin de siècle has been preoccupied with dire images of a devastated future: we might think here of the full-blown resurgence of millenarian thought and the orchestrated panic surrounding the millennium bug; of X-Files paranoia, which has told us to “fight the future”; of catastrophe movies and the resurgence of film noir and dystopian science fiction. If you were to design a course on popular culture in the 1990s, you would be teaching a survey in doom.

There is much in this culture of disaster that would merit our closest attention—there is, in fact, strangeness aplenty. Consider, for instance, the emergence as a genre of the Christian fundamentalist action thriller, the so-called rapture novel. These novels are basically an exercise in genre splicing; they begin by offering, in what for right-wing Protestantism is a fairly ordinary procedure, prophetic interpretations of world events—the collapse of the Soviet Union, the new Intifada—but they then graft onto these biblical scenarios plots borrowed from Tom Clancy techno-thrillers. The first thing that needs to be noted about rapture novels, then, is that they signal, on the part of U.S. fundamentalism, an unprecedented capitulation to pop culture, which the godly Right had until recently held in well-nigh Adornian contempt. Older forms of Christian mass culture have seized readily on new technologies—radio, say, or cable television—but they have tended to recreate within those media a gospel or revival-show aesthetic. In rapture novels, by contrast, as in the rapture movies that have followed in the novels’ wake, we are able to glimpse the first outlines of a fully commercialized, fully mediatized Christian blockbuster culture. Fundamentalist Christianity gives way at last to commodity aesthetics.

This is not yet to say enough, however, because this rapprochement inevitably holds surprises for secular and Christian audiences alike. The best-selling rapture novel to date is Jerry Jenkins and Timothy LaHaye’s Left Behind, which has served as a kind of template for the entire genre. In the novel’s opening pages, the indisputably authentic Christians are all called up to Christ—they are “raptured.” They literally disappear from earth, leaving their clothes pooled on the ground behind them, pocket change and car keys scattered across the pavement. This scene is the founding convention of the genre, the one event that no rapture novel can do without. And yet this mass vanishing, conventional though it may be, cannot help but have some curious narrative consequences. It means, for a start, that the typical rapture novel is not interested in good Christians. The heroes of these stories, in other words, are not godly people—this is true by definition, because the real Christians have all quit the scene; they have been vacuumed from the novel’s pages. In their absence, the narrative turns its attention to indifferent or not-quite Christians, who can be shown now snapping out of their spiritual ennui, rallying to God, and taking up the fight against the anti-Christ (who, in Left Behind, takes the form of an Eastern European humanitarian whose malign plans include scrapping the world’s nuclear arsenals and feeding malnourished children). Left Behind, I would go so far as to suggest, seems to work on the premise that there is something better—something more significantly Christian—about bad Christians than there is about good ones. This notion has something to do with the role of women in the novel. Left Behind, it turns out, has almost no use for women at all. They all either disappear in the novel’s opening pages or get left behind and metamorphose into the whores of anti-Christ.
It will surprise no one to find a Christian fundamentalist novel portraying women as whores, but the former point is worth dwelling on: Left Behind cannot wait to dispense with even its virtuous women. It may hate the harlots, but it has no use for ordinary church-supper Christians either, imagined here as suburban housewives and their well-behaved young children. Anti-Christ has to be defeated at novel’s end, and for this to happen, the good Christians have to be shown the door, for smiling piety can, in the novel’s terms, sustain no narrative interest; it can enter into no conflicts. Left Behind is premised on the notion that devout Christians are cheek-turning wimps and goody-two-shoes, mere women, in which case they won’t be much good in the fight against the liberals and the Jews. What this means is that the protagonists who remain in the novel—the Christian fence-sitters—are all men, and not just any men, but rugged men with rugged, porn-star names: Rayford Steele, Buck Williams, Dirk Burton. Left Behind is a novel, in other words, that envisions the remasculinization of Christianity, that calls upon its readers to imagine a Christianity without women, but with muscle and grit instead, a Christianity that can do more than just bake casseroles for people. And such a project, of course, requires bad Christians so that they may become bad-ass Christians. Perhaps it goes without saying: A Christian action thriller is going to be interested first and foremost in action-thriller Christians.

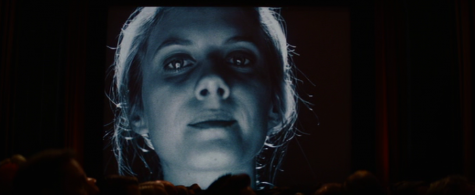

It is with the film version of Left Behind (2001), however, that things really get curious. The film’s final moments nearly make explicit a feature of the narrative that is half-buried in the novel: The film concludes with a brief sequence that we’ve all seen a dozen times, in a dozen different action movies—the sequence, that is, in which the heroic husband returns home from his adventures to be reunited with his wife and child. Typically, this scene is staged at the front door of the suburban house with the child at the wife’s side; you might think, emblematically, of the final shots of John Woo’s Face/Off (1997), which show FBI Agent Sean Archer (John Travolta) exchanging glances with his wife (Joan Allen) over the threshold as their teenaged daughter hovers in the background. Left Behind, for its part, reproduces that scene almost exactly, almost shot for shot, except that, since the women have all evaporated or gone over to anti-Christ, the film has no choice but to stage this familiar ending in an unfamiliar way—between its male heroes, between Rayford Steele, standing in the doorway with his daughter, and a bedraggled Buck Williams, freshly returned from his battles with the Beast. A remasculinized Christianity, then, cannot help but imagine that the perfect Christian family would be—two men. Such, then, is one upshot of fundamentalism’s new openness to pop culture: Christianity uncloseted.

Of course, the borrowings can go in the other direction as well. Secular apocalypse movies can deck themselves out in religious trappings, but when they do so, they risk an ideological incoherence of their own. Think first about conventional, secular catastrophe movies—Armageddon (1998), Deep Impact (1998), Volcano (1997)—so-called apocalypse films that actually make no reference to religion. These tend to be reactionary in rather humdrum and technocratic ways, full of experts and managers deploying the full resources of the nation to fend off a threat defined from the outset as non-ideological. The volcanoes and earthquakes and meteors that loom over such movies are therefore merely more refined versions of the maniacal terrorists and master thieves who normally populate action movies: they are enemies of the state whose challenge to the social order never approaches the level of the political. It is when such secular narratives reintroduce some portion of religious imagery, however, that their political character becomes pronounced. We might think here of The Seventh Sign (1988), which featured Demi Moore, or of the Arnold Schwarzenegger vehicle End of Days (1999). Like Left Behind, these last two films work by combining biblical scenarios and disaster-movie conventions, and the results are similarly confusing. To be more precise, they begin by offering luridly Baroque versions of the Christian apocalypse narrative, but then revert to the secular logic of the disaster movie, as though to say: Catastrophes are destabilizing a merciless world in preparation for Christ’s return—and this must be stopped! In a half-hearted nod to Christian ethics, each of these movies begins by depicting the world of global capitalism as brutal and unjust—the montage of squalor has become something of an apocalypse-movie cliché—before deciding that this world must be preserved at all costs.
The characters in these films, in other words, expend their entire allotment of action-movie ingenuity trying to prevent the second coming of Christ, imagined here as the biggest disaster of all.[3]

This is not to say that contemporary American apocalypses dispense with redemptive imagery altogether, at least of some worldly kind. Carceral dystopias, for instance, films that work by trapping their characters in controlled and constricted spaces, tend to posit some utopian outside to their seemingly total systems: the characters in Dark City (1997) dream of Shell Beach, the fictitious seaside resort that supposedly lies just past their nightmarish noir metropolis, the illusory last stop on a bus line that actually runs nowhere; the man-child of Peter Weir’s The Truman Show (1998) dreams, in similar ways, of Fiji, which is a rather more conventional vision of oceanic bliss; and the Horatio Alger hero of the genetics dystopia Gattaca (1997) follows this particular utopian logic to its furthest end by dreaming of the day he will be made an astronaut, the day he will fly to outer space, which of course is no social order at all, let alone a happier one, but merely an anything-but-here, an any-place-but-this-place, the sheerest beyond. As utopias go, then, these three are remarkably impoverished; they cannot help but seem quaint and nostalgic, strangely dated, like the daydreams of some Cold War eight-year-old, all Coney Island and Polynesian hula girls and John Glenn, shoot-the-moon fantasies.

But then it is precisely the old-fashioned quality of these utopias that is most instructive; it is precisely their retrograde quality that demands an explanation. For if, on the one hand, U.S. pop culture has seemed preoccupied with the apocalypse, on the other hand it has seemed every bit as obsessed with cheery images from a sanitized past. Apocalypse culture has as its companion the many-faceted retro-craze: vintage clothing; Nick at Nite; the ’70s vogue; the ’50s vogue; the ’40s vogue; the ’30s vogue; the ’20s vogue (the ’60s are largely missing from this tally, for reasons too obvious to enumerate; the ’60s vogue has been stunted, almost nonexistent, at least within a U.S. framework—retro tops out about 1963 and then gets shifted over to Europe and the mods); the return of surf, lounge music, and Latin jazz; retro-marketing and retro-design, and especially the Volkswagen Beetle and the PT Cruiser.

Retro, then, deserves careful consideration of its own, as an independent phenomenon alongside the apocalypse. Some careful distinctions will be necessary. Retro takes a hundred different forms; it has the appearance of a single and coherent phenomenon only at a very high level of generality. We could begin, then, by examining the heavily marketed ’60s and ’70s retro of mainstream, white youth culture. Here we would want to say, at least on first pass, that the muffled camp of Austin Powers (1997), say—or the mid-’90s Brady Bunch revival, or Beck’s Midnite Vultures—closely approximates Jameson’s notion of postmodern pastiche: this is retro as blank parody, the affectless recycling of alien styles, worn like so many masks. But that said, we would have to counterpose against these examples the retro-culture of a dozen regional scenes, scattered across the U.S., most of which are retro in orientation, but none of which are exercises in pastiche exactly. Take, for instance, the rockabilly and honky-tonk scene in Chapel Hill, North Carolina: It is impeccably retro in its musical choices and impeccably retro in its fashions, full of redneck hipsters sporting bowling shirts and landing-pad flattops and smart-alecky tattoos. Theirs is a form of retro whose reference points are emphatically local, and in its regionalism, the Chapel Hill scene aspires to a subculture’s subversiveness, a kind of Southern-fried defiance, which stakes its ground in contradistinction to some perceived American mainstream and then gives its rebellion local color, as though to say: “We don’t work in your airless (Yankee) offices. We don’t speak your pinched (Yankee) speech. We don’t belong to your emasculated (Yankee) culture. We are hillbillies and punks in equal proportion.” Retro, in short, can be placed in the service of a kind of spitfire regionalism, and there is little to be gained by simply conflating this form of retro with the retro-culture marketed nationwide.

In fact, even mainstream ’70s retro can take on different valences in different hands. To cite just one further example: hip-hop sampling, which builds new tracks out of the recycled fragments of existing recordings, might seem upon first inspection to be the very paradigm of the retro-aesthetic. And yet hip-hop, which has mined the ’70s funk back-catalog with special diligence, typically forgoes the irony that otherwise accompanies such postmodern borrowings. Indeed, hip-hop sampling generally involves something utterly unlike irony; it is often positioned as a claim to authenticity, an homage to the old school, so that when OutKast, say, channels some vintage P-Funk, that sample is meant to function as a genetic link, a recurring trait or musical cell-form. The sample is meant to serve as a tangible connection back to some originary moment in the history of soul and R&B (or funk and disco).[4]

So differences abound in retro. And yet one is tempted, all the same, to speak of something like an official retro-culture, which takes as its object the 1940s and ’50s: diners, martinis, “swing” music (which actually refers, not to ’30s and ’40s swing, but to post-war jump blues), industrial-age furniture, late-deco appliances, all chrome and geometry. The most important point to be made about this form of retro is that it is an unabashedly nationalist project; it sets out to create a distinctively U.S. idiom, one redolent of Fordist prosperity, an American aesthetic culled from the American century, a version of Yankee high design able to compete, at last, with its vaunted European counterparts. In general, then, we might want to say that retro is the form that national tradition takes in a capitalist culture: Capitalism, having liquidated all customary forms of culture, will sell them back to you at $16 a pop. But then commodification has ever been the fate of national customs, which are all more or less scripted and inauthentic. What is distinctive about retro, then, is the class of objects that it chooses to burnish with the chamois of tradition. There is a remarkable scene near the beginning of Jeunet and Caro’s great retro-film Delicatessen (1991) that is instructive in this regard: Two brothers sit in a basement workshop, handcrafting moo-boxes—those small, drum-shaped toys that, once upended and then set right again, low like sorrowful cows. The brothers grind the ragged edges from the boxes, blow away the shavings as one might dust from a favorite book, rap the work-table with a tuning fork and sing along with the boxes to ensure the perfect pitch of the heifer’s bellow.
And in that image of their care, their workman’s pride, lies one of retro-culture’s great fantasies: Retro distinguishes itself from the more or less folkish quality of most national traditions in that it elevates to the status of custom the commodities of early mass production—old Coke bottles, vintage automobiles—and it does so by imbuing them with artisanal qualities, so that, in a strange historical inversion, the first industrial assembly lines come to seem the very emblem of craftsmanship. Retro is the process by which mass-produced trinkets can be reinvented as “heritage.”[5]

The apocalypse and the retro-craze—such, then, are the twin poles of postmodernism, at least on Jameson’s account. We are all so accustomed to this twosome that it has become hard to appreciate what an odd juxtaposition it really is. Disco inferno, indeed. This is a pairing, at any rate, that finds a rather precise corollary in the writings of Walter Benjamin. Each of the moments of our swinging apocalypse can be traced back to Benjaminian impulses, or opens itself, at least, to Benjaminian description. For in what other thinker are we going to find, in a manner that so oddly approximates the culture of American malls and American multiplexes, this combination of millenarian mournfulness and antiquarian devotion? Benjamin’s Collector seems to preside over postmodernism’s thrift-shop aesthetic, just as surely as its apocalyptic imagination is overseen by Benjamin’s Messiah, or at least by his Catastrophic Angel. It would seem, then, that Benjaminians should be right at home in postmodernism, and if this is palpably untrue—if the culture of global capitalism does not after all seem altogether hospitable to communists and the Kabbalah—then this is something we will now have to account for. Why, despite easily demonstrated affinities, does it seem a little silly to describe U.S. postmodernism as Benjaminian?

Jameson’s work is again clarifying. It is not hard to identify the Benjaminian elements in Jameson’s idiom, and especially in his utopian preoccupations, his determination to make of the future an open and exhilarating question. No living critic has done more than Jameson to preserve the will-be’s and the could-be’s in a language that would just as soon dispense altogether with its future tenses and subjunctive moods. And yet a moment’s reflection will show that Jameson is, for all that, the great anti-Benjaminian. It is Jameson who has taught us to experience pop culture’s Benjaminian qualities, not as utopian pledges, but as threats or calamities. Thus Jameson on apocalypse narratives: “It seems to be easier for us today to imagine the thoroughgoing deterioration of the earth and of nature than the breakdown of late capitalism; perhaps that is due to some weakness in our imaginations. I have come to think that the word postmodern ought to be reserved for thoughts of this kind.”[6] It is worth calling attention to the obvious point about these sentences—that Jameson here more or less equates postmodernism and apocalypticism—if only because in his earliest work on the subject, it is not the apocalypse but retro-culture that seems to be postmodernism’s distinguishing and debilitating mark. Again Jameson: “there cannot but be much that is deplorable and reprehensible in a cultural form of image addiction which, by transforming the past into visual mirages, stereotypes, or texts, effectively abolishes any practical sense of the future and of the collective project.”[7] Jameson, in short, is most sour precisely where Benjamin is most expectant. 
He would have us turn our back on the most conspicuous features of Benjamin’s work; for late capitalism, it would seem, far from keeping faith with Benjamin, actually robs us of our Benjaminian tools, if only by generalizing them, by transforming them into noncommittal habits or static conventions: the Collector, fifty years on, shows himself to be just another fetishist, and even the Angel of History turns out to be a predictable and anti-utopian figure, unable to so much as train its eyes forward, foreclosing, without reprieve, on the time yet to come. U.S. postmodernism may be a culture that loves to “brush history against the grain,” but only in the way that you might brush back your ironic rockabilly pompadour.

•2.

But what if we refused to break with Benjamin in this way? Try this, just as an exercise: Ask yourself what these seemingly disparate trends—apocalypticism and the retro-craze—have to do with one another. Consider in particular that remarkable crop of recent films that actually unite these two trends, films that ask us to imagine an unlivable future, but do so in elegant vintage styles. These include: Ridley Scott’s Blade Runner (1982), the granddaddy of the retro-apocalypses; three oddly upbeat dystopias—Starship Troopers and the aforementioned Gattaca and Dark City—all box-office underachievers from 1997; and, again, the cannibal slapstick Delicatessen. All of these films posit, in their very form, some profound correlation between retro and the apocalypse, but it is hard, on a casual viewing, to see what that correlation could be. Jameson, of course, offers a clear and compelling answer to this question, which is that apocalypticism and the retro-craze are the Janus faces of a culture without history, two eyeless countenances, pressed back to back, facing blankly out over the vistas they cannot survey.[8]

Some of these films, it must be noted, seem to invite a Jamesonian account of themselves. This is true of Blade Runner, for instance, or of The Truman Show—films that offer a vision of retro-as-dystopia, a realm of fabricated memory, in which history gets handed over to corporate administration, in which every madeleine is stamped “Made in Malaysia.” Perhaps it is worth pausing here, however, since we need to be wary of running these two films together. The contrast between them is actually quite revealing. Both Blade Runner and The Truman Show present retro-culture as dystopian, and in order to do this, both rely on some of the basic conventions of science fiction. Think about what makes science fiction distinctive as a mode—think, that is, about what distinguishes it from those genres with which it seems otherwise affiliated, such as the horror movie. Horror movies, especially since the 1970s, have typically worked by introducing some terrifying, unpredictable element into apparently safe and ordinary spaces. Monsters are nearly always intruders—slashers in the suburbs, zombies forcing their way past the barricaded door. But dystopian science fiction is, in this respect, nearly the antithesis of horror. It does not depict a familiar setting into which something frightening then gets inserted. What is frightening in dystopian science fiction is rather the setting itself. Now, this point holds for both Blade Runner and The Truman Show, but it holds in rather different ways. The first observation that needs to be made about The Truman Show is that it is more or less a satire, which is to say that, though it takes retro as its object, it is not itself a retro-film. It portrays a world that has handed itself over entirely to retro, a New Urbanist idyll of gleaming clapboard houses on mixed-use streets; but the film itself is not, by and large, retro in its narrative forms or cinematic techniques. 
Quite the contrary: the film wants to teach its viewers how to read retro in a new way; it wishes, polemically, to loosen the hold of retro upon them. The Truman Show takes a setting that initially seems like some American Eden, and then through the menacing comedy of its mise-en-scène—the falling lights and incomplete sets, the scenery that Truman stumbles upon or that springs disruptively to life—makes this retro-town come slowly to seem ominous. To give the film the cheap Lacanian description it is just begging for: The Truman Show charts the unraveling of the symbolic order. Every klieg light that comes crashing down from the sky is a warning shot fired from the Real. The simpler point, however, is that The Truman Show rests on a deflationary argument about American mass culture—a media-governed retro-culture depicted here as restrictive, counterfeit, and infantilizing—and its form is accordingly rather conventional. It is essentially a cinematic Bildungsroman, which ends once the protagonist steps forward to take full responsibility for his own life, and this, of course, tends to compromise the film’s own Lacanian premise: It suggests that any of us could simply step out of the symbolic order, step boldly out into the Real, if only we could muster sufficient resolve.[9]

Having a compromised and conventional form, however, is not the same thing as having a retro-form. In Blade Runner, by contrast, the setting—a dismal and degenerate Los Angeles—is self-evidently dystopian, but it is itself retro; it is retro as a matter of style or form. The film’s vision of L.A., as has often been observed, is equal parts Metropolis and ’40s film noir, and the effect of the film is thus rather different from The Truman Show, though it is equally curious: Blade Runner may recycle earlier styles or narrative forms in a manner typical of retro, but the films that it mimics are themselves all more or less dystopian. If Blade Runner is a pastiche, it is a pastiche of other dystopias, and this has the effect of establishing the correlation between retro and the apocalypse in a distinctive way: Blade Runner posits a historical continuum between a bleak past and an equally bleak future, between the corrupt and stratified modernist city (of German expressionism and hardboiled fiction) and the coming reign of corporate capital (envisioned by so much science fiction), between the bad world we’ve survived and the bad world that awaits.

Such, then, are the films that seem ready to make Jameson’s argument for him. But there is good reason, I think, to set Jameson temporarily to one side. For present purposes, it would be more revealing to direct our attention back to Delicatessen, which, of all the retro-apocalypses, is perhaps the most winning and Benjaminian. The question that confronts any viewer of Delicatessen is why this film—which, after all, depicts an utterly dismal world in which men and women are literally butchered for meat—should be so delightful to watch, and not just wry or darkly humorous, but giddy and dithyrambic. I would suggest that the pleasure peculiar to Delicatessen has everything to do with the status of objects in the film—that is, with the extravagant and festive care that Jeunet and Caro bring to the filming of objects, which take on the appearance here of so many found and treasured items. One might call to mind the hand-crank coffee grinder, which doubles as a radio transmitter; or the cherry-red bellboy’s outfit; or simply the splendid opening credits—this slow pan over broken records and torn photographs—in which the picture swings open like a case of curiosities. It is as though the film took as its most pressing task the re-enchantment of the object-world, as though it were going to lift objects to the camera one by one and reattach to them their auras—not their fetishes, now, as happens in most commercial films, with their product placements and designer outfits—but their auras, as though the objects at hand had never passed through a marketplace at all. This is tricky: The objects in Delicatessen are recognizably of the same type as American retro-commodities—an antique wind-up toy, an old gramophone, stand-alone black-and-white television sets. 
At this point, then, the argumentative alternatives become clear: Either we can dismiss Delicatessen as ideologically barren, as just another pretext for retro-consumption, just another flyer for the flea market of postmodernism. Or we can muster a little more patience, tend to the film a little more closely, in which case we might discover in Delicatessen the secret of all retro-culture: its desire, delusional and utopian in equal proportion, for a relationship to objects as something other than commodities.

To follow the latter course is to raise an obvious question: How does the film direct our attention to objects in a new way? How does it reinvigorate our affection for the object world? This is a question, first of all, of the film’s visual style, although it turns out that nothing all that unusual is going on cinematographically: In a manner characteristic of French art-film since the New Wave, Delicatessen keeps the spectator’s eye on its objects simply by cutting to them at every opportunity and thus giving them more screen time than household artifacts typically claim. By the usual standards of analytical editing, in other words—within the familiar breakdown of a scene into detailed views of faces, gestures, and props—the props get a disproportionate number of shots. The objects, like so many Garbos, hog all the close-ups. “By permitting thought to get, as it were, too close to its object,” Adorno once said of Benjamin’s critical method, “the object becomes as foreign as an everyday, familiar thing under a microscope.”[10] Delicatessen works, in these terms, by taking Adorno’s linguistic figure at face value and returning it to something like its literal meaning, back to the visual. The film permits the camera to get too close to its object. It forces the spectator to scrutinize objects anew simply by bringing them into sustained proximity.

The camerawork, however, is just the start of it, for in addition to the question of cinematic style, there is the related question of form or genre. Delicatessen, it turns out, is playing a crafty game with genre, and it is through this formal frolic that the film most insistently places itself in the service of its objects. For Delicatessen is retro not only in its choice of props—it is, like Blade Runner, formally or generically retro, as well. This point may not be immediately apparent, however, since Delicatessen resurrects a genre largely shunned by recent U.S. film. One occasionally gets the feeling from American cinema that film noir is the only genre ripe for recycling. The 1990s have delivered a whole paddy wagon full of old-fashioned crime stories and heist pics, but where are all the other classic Hollywood genres? Where are the retro-Westerns and the retro war movies? Where are the retro-screwballs?[11] Neo-noir, of course, is relatively easy to pull off—dim the lights and fire a gun and some critic or another will call it noir. Delicatessen, for its part, attempts something altogether more difficult or, at least, sets in motion a less reliable set of cinematic conventions: pratfalls, oversized shoes, madcap chase scenes. Early on, in fact, the film has one of its characters say that, in its post-apocalyptic world, people are so hungry they “would eat their shoes”; and with this one line—an unambiguous reference to the celebrated shoe-eating of Chaplin’s The Gold Rush—it becomes permissible to find references to silent comedy at every turn: in the hero’s suspenders, in the film’s several clownish dances, in the near-demolition of the apartment building in which all the action is set, a demolition that, once read as slapstick, will call to mind Buster Keaton’s wrecking-ball comedy, the crashing houses of Steamboat Bill, Jr. (1928), say. Delicatessen, in sum, is retro-slapstick, and noting as much will allow us to ask a number of valuable questions.

The most compelling of these questions will return us to the matter at hand. We are trying to figure out how Delicatessen gets the viewer to pay attention to its objects, and so the question now must be: What does slapstick have to do with the status of objects in the film? It is hardly intuitive, after all, that slapstick should bring about the redemption of objects, should reattach objects to their auras. A cursory survey of classic slapstick, in fact, might suggest just the opposite—a world, not of enchanted objects, but of aggressive and adversarial ones. Banana peels and cream pies spring mischievously to mind. And yet we need to approach these icons with caution, lest we take a conceptual pratfall of our own; for Delicatessen draws on slapstick in at least two different ways, or rather, it draws on two distinct trends in early American slapstick, and each of these trends grants a different status to its objects. Everything rides on this distinction:

1) When we think of slapstick, we think first of all of roughhouse comedy, of the pie in the face and the kick in the pants, the endless assault on ass and head. Classic slapstick of this kind is what we might call the comedy of Newtonian physics. It is a farce of gravity and force, and as such, it is based on the premise that the object world is fundamentally intransigent, hostile to the human body. In this Krazy Kat or Keystone world, every brick, every mop is a tightly wound spring of kinetic energy, always ready to uncoil, violently and without motivation.[12] It is worth remarking, then, that Delicatessen contains its share of knockabout: the Rube Goldberg suicide machines, the postman always tumbling down the stairs. In its most familiar moments, Delicatessen, in keeping with its comic predecessors, seems to suggest that the human body is irreparably out of joint with its environment.

A first distinction is necessary here, for though Delicatessen may embrace the sadism of slapstick, it does so with a historical specificity of its own. Classic slapstick typically addresses itself to the place of the body under urban and industrial capitalism; one is pretty much obliged at this point to adduce Chaplin’s Modern Times (1936), with its scenes of working-class mayhem and man-eating machines. Delicatessen, by contrast, contains man-eaters of its own, but they are not metaphorical man-eaters, as Chaplin’s machines are—they are cannibals true and proper, and their presence adds a certain complexity to the question of the film’s genre, for there have appeared so many films about cannibalism over the last twenty years that they virtually constitute a minor genre of their own.[13] One way to describe Delicatessen’s achievement, then, is to say that it splices together classic slapstick with the cannibal film. There will be no way to appreciate what this means, however, until we have determined the status of the cannibal in contemporary cinema. Broadly speaking, images of the cannibal tend to participate in one of two discourses: Historically, they have played a rather repugnant role in the racist repertoire of colonial clichés. Cannibalism is one of the more extreme versions of the imperial Other, the savage who does not respect even the most basic of civilization’s taboos. Increasingly, however, in films such as Eat the Rich (1987) or Dawn of the Dead (1978), cannibalism has become a conventional (and more or less satirical) image of Europeans and Americans themselves—an image, that is, of consumerism gone awry, of a consumerism that has liquidated all ethical boundaries, that has sunk into raw appetite, without restraint.[14] For present purposes, this point is nowhere clearer than in Delicatessen’s final chase scene, in which the cannibalistic tenants of the film’s apartment house gather to hunt down the film’s hero. 
The important point here is that, within the conventions of classic Hollywood comedy, the film makes a conspicuous substitution, for our comic hero is not on the run from some exasperated factory foreman or broad-shouldered cop on the beat, as silent slapstick would have it. He is fleeing, rather, from a consumer mob, E.P. Thompson’s worst nightmare, some degraded, latter-day bread riot. It is important that we appreciate the full ideological force of this switchover: By staffing the old comic scenarios with kannibals instead of kops, the film is able to transform slapstick in concrete and specifiable ways. The cannibals mean that when Delicatessen revives Chaplin-era slapstick, it does so without Chaplin’s factories or Chaplin’s city. This is slapstick for some other, later stage of capitalism—modernist comedy from which modernist industry has disappeared, leaving only consumption in its place.

2) Slapstick, then, announces a pressing political problem, in Delicatessen as in silent comedy. It sounds an alarm on behalf of the besieged human body. Delicatessen’s project, in this sense, is to imagine that problem’s solution, to mount a counterattack, to ward off the principle of slapstick by shielding the human body from its batterings. The deranged, consumption-mad crowd, in this light, is one, decidedly sinister version of the collective, but it finds its counterimage here in a second collective, a radical collective—the vegetarian insurgency that serves as ethico-political anchor to the film. Or to be more precise: The film is a fantasy about the conditions under which an advanced consumer capitalism could be superseded, and in order to imagine those conditions, it follows two different tracks: One of the film’s subplots follows the efforts of the anti-consumerist underground, the Troglodytes, while a second subplot stages a fairly ordinary romance between the clown-hero and a butcher’s daughter. Delicatessen thus divides its utopian energies between the revolutionary collective, depicted here as some lunatic version of la Résistance, and the heterosexual couple, imagined in impeccably Adornian fashion as the last, desperate repository of human solidarity, the faint afterimage of a non-instrumental relationship in a world otherwise given over to instrumentality.[15]

But this pairing does not exhaust the film’s political imagination, if only because knockabout does not exhaust the possibilities of slapstick. Delicatessen, in fact, is more revealing when it refuses roughhouse and shifts instead into one of slapstick’s other modes. Consider the key scene, early in the film, when the clown-hero, who has been hired as a handyman in the cannibal house, hauls out a bucket of soapy water to wash down the stairwell. The bucket, of course, is another slapstick icon, and anyone already cued in to the film’s generic codes might be able to predict how the scene will play out. Classic slapstick would dictate that the hero’s foot get wedged inside the bucket, that he skid helplessly across the ensuing puddle, that the mop pivot into the air and crack him in the forehead, that he somersault finally down the stairs. The important point, of course, is that no such thing happens. The clown does not get his pummeling. On the contrary, he uses his cleaning bucket to fill the hallway of this drear and half-inhabited house with giant, wobbling soap-bubbles, with which he then dances a kind of shimmy. It is in this moment, when the film pointedly repudiates the comedy of abuse, that the film modulates into a different tradition of screen comedy, what Mark Winokur has called “transformative” or “tramp” comedy.

The hallway scene, in other words, is Chaplin through and through. It is important, then, to specify the basic structure of the typical Chaplin gag—and to specify, in particular, what distinguishes Chaplin from the generalized brutality and bedlam of the Keystone shorts. Chaplin’s bits are so many visual puns: they work by taking an everyday object and finding a new and exotic use for it, turning a roast chicken into a funnel, or a tuba into an umbrella stand, or dinner rolls into two dancing feet.[16] In Delicatessen, such transformative comedy is apparent in the New Year’s Eve noisemaker that the frog-man uses as a tongue, to catch flies; or in the hero’s musical saw, which, in fact, is the very emblem of the film’s many objects—an implement liberated from its pedestrian uses, a tool that yields melody, a dumb commodity suddenly able to speak again, and not just to shill, but to murmur of new possibilities. It is in transformative comedy, then, in the spectacle of objects whose use has been transposed, that slapstick takes on a utopian function. Slapstick becomes, so to speak, its own solution: Knockabout slapstick, in which objects are perpetually in revolt against the human body, finds its redemption in transformative slapstick, in which the human body discovers a new and unexpected affinity with objects. The pleasure distinctive of Delicatessen is thus a grand comic version of Kant’s aesthetics, of Kant’s Beauty, premised as it is on the dawning and grateful realization that objects are ultimately and against all reasonable expectation suited to human capacities. Delicatessen reimagines the world as a perpetual pas de deux with the inanimate.[17]

Transformative slapstick, this is all to say, functions in Delicatessen as a kind of antidote to cannibalistic forms of consumption. At its most schematic, the film faces its viewers with a choice between two different ways of relating to objects: a cannibalistic relationship, in which the object will be destroyed by the consumer’s unchecked hunger, or a Chaplinesque relationship, in which the object will be kept alive and continually reinvented. And so at a moment when cinematic realism has fallen into a state of utter disrepair, when realism finds it can do nothing but script elegies for the working class—when even fine films like Ken Loach’s Ladybird Ladybird (1994) and Zonca’s Dream Life of Angels (1998) have opted for the funereal, with even the protest drubbed out of them—it falls to Delicatessen’s grotesquerie to fulfill realism’s great utopian function, to keep faith, as Bazin said, with mere things, “to allow them first of all to exist for their own sakes, freely, to love them in their singular individuality.”[18]

It is crucial, however, that we not confine this observation to Delicatessen, because in that film’s endeavor lies the buried aspiration of all retro-culture, even (or especially) at its most fetishistic. If you examine the signs that hang next to the objects at Restoration Hardware and other such retro-marts—these small placards that invent elaborate and fictional histories for the objects stacked there for sale—you will discover a culture recoiling from its commodities in the very act of acquiring them, a culture that thinks it can drag objects back into the magic circle if only it can learn to consume them in the right way. Retro-commodities utterly collapse our usual Benjaminian distinctions between the fetish and the aura, and they do so by taking as their fundamental promise what Benjamin calls “the revolutionary energies that appear in the ‘outmoded,’” the notion that if you know the history of an item or if you can aestheticize even the most ordinary of objects—a well-wrought dustpan, perhaps, or a chrome toaster—then you are never merely buying an object; you are salvaging it from the sphere of circulation, and perhaps even from the tawdriness of use.[19]

This is not yet to say enough, however, because it is the achievement of Delicatessen to demonstrate that this retro-utopia is unthinkable without the apocalypse. For if the objects in Delicatessen achieve a luminosity that is denied even the most exquisite retro-commodities, then this is only because they occupy a ruined landscape, in which they come to seem singular and irreplaceable. Delicatessen is a film whose characters are forever scavenging for objects, scrapping over parcels that have gone astray, rooting through the trash like so many hobos or German Greens. It is the film’s fundamental premise, then, that in a time of shortage, and in a time of shortage alone, objects will slough off their commodity status. They will crawl out from under the patina of mediocrity that the exchange relationship ordinarily imposes on them. If faced with shortage, each object will come to seem unique again, fully deserving of our attention. There is a startling lesson here for anyone interested in the history of utopian forms: that utopia can require suffering, or at least scarcity, and not abundance; that the classical utopias of plenty—those Big Rock Candy mountains with their lemonade springs and cigarette trees and smoked hams raining from the sky—are, under late capital, little more than hideous afterimages of the marketplace itself, spilling over with redundant and misdistributed goods, stripped of their revolutionary energy; that a society of consumption must, however paradoxically, find utopia in its antithesis, which is dearth.[20] And so we come round, finally, to my original point: that we must have, alongside Jameson, a second way of positing the identity of retro-culture and the apocalypse, one that will take us straight back to Benjamin: Underlying retro-culture is a vision of a world in which commodity production has come to a halt, in which objects have been handed down, not for our consumption, but for our care. 
The apocalypse is retro-culture’s deepest fantasy, its enabling wish.

[1] Jameson’s full comments can be found in the London Review of Books (Volume 23, Number 19, October 4, 2001). See also “Architecture and the Critique of Ideology,” in The Ideologies of Theory, Volume 2: The Syntax of History, pp. 35-60, esp. p. 41: “dialectical interpretation is always retrospective, always tells the necessity of an event, why it had to happen the way it did; and to do that, the event must already have happened, the story must already have come to an end.”

[2] This essay is available in multiple versions. The easiest to come by is perhaps “Postmodernism and Consumer Society,” in The Cultural Turn (London: Verso, 1998), pp. 1-20; the most densely argued is “The Cultural Logic of Late Capitalism,” in Postmodernism, or, The Cultural Logic of Late Capitalism (Durham: Duke, 1991), pp. 1-54.

[3] The Seventh Sign, for what it’s worth, draws on at least four different genres: 1) It is, at the most general level, a Christian apocalypse narrative; its nominal subject is the End Time, the series of catastrophes set in motion by God in preparation for His final judgment. 2) But in doing so, it deploys most of the conventions of the occult horror film. Even though the film expressly states that God is responsible for the disasters depicted, it cannot help but stage those disasters as supernatural and scary, in sequences borrowed more or less wholesale from the exorcism and devil-child movies of the 1970s, which is to say that viewers are expected to experience God’s actions as essentially diabolical. The film may adorn itself with Christian trappings, but in a manner typical of the Gothic, it cannot, finally, represent religion as anything but frightening. 3) This last point is clearest in the film’s depiction of Jesus Christ, who actually appears as a character and is almost always filmed in shots lifted from serial-killer films—Jesus stands alone, isolated in ominous medium long-shots, his face half in shadow, lit starkly from the side. Jesus’ menace is also a plot point: Christ, in the film, rents a room from Demi Moore and, in a manner that recalls Pacific Heights (1990) or The Hand That Rocks the Cradle (1992), becomes the intruder in the suburban home, the malevolent force that the white professional family has mistakenly welcomed under its roof. 4) In its final logic, then, the film reveals itself to be just a disaster movie in disguise: The Apocalypse must be scuttled. Christ must be sent back to heaven (and thus evicted from the suburban home). Justice must be averted.

[4] I owe this point to a conversation with Roger Beebe. Even here, though, matters are more complicated than they at first seem. Hip-hop, after all, hardly dispenses with irony and pastiche altogether: Jay-Z has sampled “It’s the Hard-Knock Life” (from Annie) and Missy Elliott has sampled Mozart’s Requiem, but no one is likely to suggest that hip-hop is establishing a genetic link back to the Broadway musical or Viennese classicism.

[5] Of course, as a nationalist project, retro will play out differently in different national contexts. Perhaps a related cinematic example will make this clear. Consider Jeunet’s Le Fabuleux destin d’Amélie Poulain (2001). At the level of diegesis—as a plain matter of plot and dialogue and character—the film has nothing at all to do with nationalism. On the contrary, it dedicates an entire subplot to undermining the provincialism of one of its characters, Amélie’s father, who resolves at movie’s end to become more cosmopolitan. The entire film is directed towards getting him to leave France. But at the level of form, things look rather different. Formally, the film is retro through and through. It won’t take a cinephile to notice the overt references to Jules et Jim (1962) and Zazie dans le métro (1960), at which point it becomes clear that Amélie is a pastiche of the French New Wave, which is thereby transformed into a historical artifact of its own. Amélie, then, attempts to recreate the nouvelle vague, not with an eye to making it vital again as an aesthetic and political project, but merely to cycle exhaustively through its techniques, its stylistic tics, as though it were compiling some kind of visual compendium. The nationalism that the film’s narrative explicitly rejects thus reappears as a matter of form. Amélie works to draw our attention to the Frenchness of the New Wave, to codify it as a national style, and the presumed occasion for the film is therefore the ongoing battle, in France, over the Americanization of la patrie. Amélie is a bulldozer looking for its McDonald’s.

[6] See Jameson’s “The Antinomies of Postmodernism,” in The Cultural Turn, pp. 50-72, quotation p. 50.

[7] See “The Cultural Logic of Late Capitalism,” in Postmodernism, or, The Cultural Logic of Late Capitalism (Durham: Duke, 1991), pp. 1-54, quotation p. 46.

[8] The second quotation cited here goes on to make this point clear: Retro-culture, Jameson continues, “abandon(s) the thinking of future change to fantasies of sheer catastrophe and inexplicable cataclysm, from visions of ‘terrorism’ on the social level to those of cancer on the personal.”

[9] The Truman Show, to be fair, does hedge the matter somewhat. The film’s numerous cutaways to the show’s viewers show a “real world” that is itself populated by TV-thralls, Truman Burbanks of a lower order. So when Truman steps out of his videodrome, we have a choice: We can either conclude, in proper Lacanian fashion, that Truman has simply traded one media-governed pseudo-reality for another. Or we can conclude that the film is asking us to distinguish between those, like Truman, who are able to shrug off their media masters, and those, like his viewers, who aren’t. I take this to be the film’s constitutive hesitation, its undecidable question.

[10] See Adorno’s “Portrait of Walter Benjamin” in Prisms, translated by Samuel and Shierry Weber (Cambridge: MIT, 1981, pp. 227-241), here p. 240.

[11] Examples of these last can be found, but it takes some looking: Paul Verhoeven’s Starship Troopers (1997) is a retro World War II movie, more so than Pearl Harbor (2001) or Saving Private Ryan (1998), which aspire to be historical dramas; and the Coen brothers’ Hudsucker Proxy (1994) is unmistakably a retro-screwball (and such a lovely thing that it’s a wonder others haven’t followed its lead). But they are virtually the lone examples of their kinds, singular members of non-existent sets. Neo-noir, by contrast, has become too extensive a genre to list comprehensively.

[12] Perhaps a rare instance of literary slapstick, manifestly modeled on cinematic examples, will drive this point home. The following is from Martin Amis’s Money (London: Penguin, 198?), p. 289: “What is it with me and the inanimate, the touchable world? Struggling to unscrew the filter, I elbowed the milk carton to the floor. Reaching for the mop, I toppled the trashcan. Swivelling to steady the trashcan, I barked my knee against the open fridge door and copped a pickle-jar on my toe, slid in the milk, and found myself on the deck with the trashcan throwing up in my face … Then I go and good with the grinder. I took the lid off too soon, blinding myself and fine-spraying every kitchen cranny.”

[13] See, for instance, Eating Raoul (1982); Parents (1989); The Cook, The Thief, His Wife, and Her Lover (1989); and, in a different mood, Silence of the Lambs (1991) and Hannibal (2001).

[14] On the cultural uses of cannibalism, see Cannibalism and the Colonial World, edited by Francis Barker, Peter Hulme, and Margaret Iversen (Cambridge: Cambridge, 1998), especially Crystal Bartolovich’s “Consumerism, or the cultural logic of late cannibalism” (pp. 204-237).

[15] For a discussion of Delicatessen that pays closer attention to the film’s narrowly French contexts—its nostalgia for wartime, its debt to French comedies—see Naomi Greene’s Landscapes of Loss: The National Past in Postwar French Cinema (Princeton: Princeton, 1999).

[16] See, respectively, Modern Times; The Pawnshop (1916); The Gold Rush (1925).

[17] There’s a sense in which this operation is at work even in the most vicious knockabout. Even the most paradigmatically abusive comedies—the Keystone shorts, say—are redemptive in that the staging of abuse itself discloses a joyous physical dexterity. The staging of bodies out of synch with the inanimate world relies on bodies that are secretly very much in synch with that world—and this small paradox characterizes the pleasure peculiar to those films.

[18] Bazin, What is Cinema?, translated by Hugh Gray (Berkeley: University of California, 1967); see also Siegfried Kracauer’s Theory of Film: The Redemption of Physical Reality (New York: Oxford, 1965).

[19] See Benjamin’s “Surrealism: The Last Snapshot of the European Intelligentsia,” translated by Edmund Jephcott in the Selected Writings: Volume 2, 1927-1934, edited by Michael Jennings, Howard Eiland, and Gary Smith (Cambridge: Belknap, 1999, pp. 207-221), here p. 210.

[20] Compare Langlé and Vanderburch’s utopia of abundance, as noted by Benjamin himself, in the 1935 Arcades-Project Exposé (in The Arcades Project, translated by Howard Eiland and Kevin McLaughlin—Cambridge: Belknap, 1999, pp. 3-13), here p. 7:

“Yes, when all the world from Paris to China

Pays heed to your doctrine, O divine Saint-Simon,

The glorious Gold Age will be reborn.

Rivers will flow with chocolate and tea,

Sheep roasted whole will frisk on the plain,

And sautéed pike will swim in the Seine.

Fricasseed spinach will grow on the ground,

Garnished with crushed fried croutons;

The trees will bring forth stewed apples,

And farmers will harvest boots and coats.

It will snow wine, it will rain chickens,

And ducks cooked with turnips will fall from the sky.”

(Translation altered)